Case Study: Testing the Codium.AI VS Code Extension

- Shai Yallin

- Jun 11, 2023

A couple of months ago I began consulting for CodiumAI, a startup in the space of AI-generated tests. The CodiumAI product is currently available as an IDE extension, both for JetBrains IDEs and for VS Code, and as part of my work with CodiumAI, I was asked to help design and implement a testing strategy for the VS Code extension.

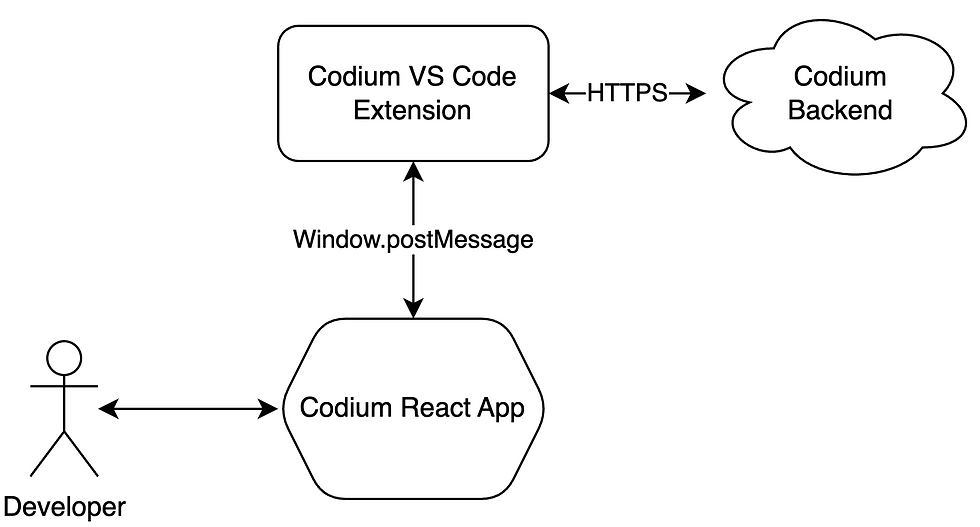

CodiumAI's product analyzes code in the IDE's workspace by sending a symbol (a class, a function, etc.) to the CodiumAI backend server, where it is fed into language models that infer its behaviors. The backend then generates test code for these behaviors and sends the tests back to the IDE extension. The CodiumAI VS Code extension is built around a React.js web application rendered as a WebView, while all business logic and integration with VS Code itself live outside the WebView. The extension and the WebView communicate with each other using message passing.

End-to-End Testing

Testing a VS Code extension involves what is known as the Extension Development Host. An npm library called test-electron (published as @vscode/test-electron) starts a specialized instance of VS Code and runs a suite of Mocha tests with access to that instance through the VS Code API. A test can interact with VS Code tabs, the workspace, and the file system, and can execute VS Code commands. However, since the CodiumAI UI WebView is implemented as an IFrame in VS Code, it is inaccessible through the VS Code API, so testing the extension together with its UI requires a different approach.

Fortunately, VS Code itself is built with web technologies (it is an Electron application written in TypeScript), which led me to explore the possibility of using Playwright to drive it. Playwright is a browser testing framework that has grown significantly in the last few years. It exposes a developer-friendly API, convenient tools for testing on different browsers and in different environments, and common utilities for testing asynchronous code, such as polling.

After conducting some research, I came across OpenVSCode Server, a fork of VS Code that runs as a web server. I created a pipeline that starts OpenVSCode Server in a Docker container, with a fixture VS Code workspace containing dummy files mounted into it. The pipeline then runs a Playwright test that opens a file, clicks the CodiumAI CodeLens to generate tests, accesses the WebView's IFrame, and verifies that it displays tests related to the file that was opened. When a test fails, an automatically generated trace file and a video recording of the failed flow are provided, which helps the CodiumAI team investigate test failures in CI.

However, these tests are slow because they rely on a Docker container and a real browser. Each test takes several seconds to execute, and on top of that the CodiumAI model can take up to dozens of seconds to generate tests. Depending solely on Playwright tests would result in a frustrating feedback cycle and a subpar developer experience. I decided that we could do better.

Acceptance Testing

Veteran followers of this blog might be familiar with my approach to achieving both good coverage and quick feedback: using Hexagonal Architecture to instantiate a version of the System Under Test in which all IO operations are replaced with in-memory Fakes. Employing Fakes enables the creation of fast, integrative Acceptance Tests that cover the application's behaviors. These tests are designed to break only if a feature is broken, as they treat the entire application as a black box, independent of specific implementation details.

Since the CodiumAI extension's WebView UI is a React web application, it can be tested effectively using React Testing Library, which enables the creation of fast UI tests that run on jsdom. We refactored all React-side code responsible for sending or listening to messages into an Adapter, and created an in-memory fake of that Adapter for testing purposes. All UI behavior can be tested using the rich Testing Library toolset, and by using Vitest rather than Jest, these tests run in milliseconds. This allows developers to run the tests in watch mode, where any code change triggers the relevant tests, ensuring immediate feedback.

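    // vscodeApi.ts

    // Minimal shape of the object that posts messages to the extension host
    export type VSCodeMsgPoster = {
      postMessage: ({ type, value }: { type: string; value: any }) => void;
    };

    // The port through which the React application talks to the extension
    export interface HostAPI {
      on<M, D>(message: M, listener: (data: D) => void): void;
      emit<M, D>(message: M, value: D): void;
    }

    // The real adapter, backed by window messages
    export class VSCodeAPI implements HostAPI {
      constructor(private msgPoster: VSCodeMsgPoster) {}

      on<M, D>(message: M, listener: (data: D) => void): void {
        window.addEventListener("message", ({ data }) => {
          // Host messages are shaped { command, data }; only notify the
          // listener about the command it subscribed to
          if (data.command === message) {
            listener(data.data);
          }
        });
      }

      emit<M, D>(type: M, value: D): void {
        this.msgPoster.postMessage({ type: String(type), value });
      }
    }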

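    // memoryHostApi.ts

    import { HostAPI } from "./vscodeApi"; // import path assumed

    type Subscription = {
      pattern: RegExp;
      listener: (message: { command: string; data: unknown }) => void;
    };

    // The in-memory fake: delivers messages synchronously, with no IO
    export class MemoryHostAPI implements HostAPI {
      subscriptions: Subscription[] = [];

      on<M, D>(message: M, listener: (data: D) => void): void {
        this.subscriptions.push({
          pattern: new RegExp(String(message)),
          listener: ({ data }) => listener(data as D),
        });
      }

      emit<M, D>(message: M, value: D): void {
        const command = String(message);
        this.subscriptions
          .filter((s) => s.pattern.test(command))
          .forEach((s) => s.listener({ command, data: value }));
      }
    }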
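To make the benefit concrete, here is a minimal, self-contained sketch of how a test might drive UI-side code through such a fake. It is not CodiumAI's code: `InMemoryBus` is a simplified stand-in for `MemoryHostAPI`, and the `TestListModel` class and the `{ name }` payload of `addTest` are hypothetical, invented for illustration only.

```typescript
// Simplified stand-in for the HostAPI port (string message names only).
interface Bus {
  on(message: string, listener: (data: unknown) => void): void;
  emit(message: string, value: unknown): void;
}

// In-memory fake: delivers messages synchronously, no window or IO involved.
class InMemoryBus implements Bus {
  private listeners = new Map<string, Array<(data: unknown) => void>>();

  on(message: string, listener: (data: unknown) => void): void {
    const existing = this.listeners.get(message) ?? [];
    this.listeners.set(message, [...existing, listener]);
  }

  emit(message: string, value: unknown): void {
    (this.listeners.get(message) ?? []).forEach((listener) => listener(value));
  }
}

// Hypothetical UI-side model that collects generated tests sent by the host.
class TestListModel {
  readonly names: string[] = [];

  constructor(bus: Bus) {
    bus.on("addTest", (data) => {
      this.names.push((data as { name: string }).name);
    });
    bus.on("clearResults", () => {
      this.names.length = 0;
    });
  }
}

// In a test, the bus plays the role of the extension host.
const bus = new InMemoryBus();
const model = new TestListModel(bus);

bus.emit("addTest", { name: "returns empty list for empty input" });
bus.emit("addTest", { name: "throws on null input" });
console.log(model.names.length); // 2

bus.emit("clearResults", undefined);
console.log(model.names.length); // 0
```

Because delivery is synchronous, the assertions can follow the `emit` calls directly, with no waiting or polling. That is a large part of why tests written against the fake are so fast.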

Contract Testing

By using an in-memory fake, we're able to write fast tests, since no IO operations take place during the execution of the test. However, this assumes that the fake is reliable. If we make a mistake when implementing the fake, tests that rely on it might pass even though the real implementation behaves differently. To gain complete confidence in the Acceptance Test suite, we must complement it with a suite of Contract Tests that runs against both the fake and the real implementation of the messaging adapter, verifying that both conform to the same interface. To fully test the real implementation, our test would need access to the IFrame's Window object, but as mentioned earlier, it's an internal implementation detail of the WebView mechanism and is inaccessible to the VS Code instance.
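The general shape of a contract suite can be sketched independently of VS Code: one set of checks, executed against every implementation of the same port. In the sketch below, `MapBus` and `ArrayBus` are two hypothetical implementations of a simplified messaging port. In the setup described here, their places would be taken by the in-memory fake and the real adapter.

```typescript
// The port under contract (simplified: string message names only).
interface Bus {
  on(message: string, listener: (data: unknown) => void): void;
  emit(message: string, value: unknown): void;
}

type Listener = (data: unknown) => void;

// Two hypothetical implementations that must behave identically.
class MapBus implements Bus {
  private listeners = new Map<string, Listener[]>();
  on(message: string, listener: Listener): void {
    this.listeners.set(message, [...(this.listeners.get(message) ?? []), listener]);
  }
  emit(message: string, value: unknown): void {
    (this.listeners.get(message) ?? []).forEach((l) => l(value));
  }
}

class ArrayBus implements Bus {
  private subscriptions: Array<{ message: string; listener: Listener }> = [];
  on(message: string, listener: Listener): void {
    this.subscriptions.push({ message, listener });
  }
  emit(message: string, value: unknown): void {
    this.subscriptions
      .filter((s) => s.message === message)
      .forEach((s) => s.listener(value));
  }
}

// The contract: each check gets a fresh instance and returns true on success.
const contract: Record<string, (bus: Bus) => boolean> = {
  "delivers the emitted value": (bus) => {
    const received: unknown[] = [];
    bus.on("addTest", (data) => received.push(data));
    bus.emit("addTest", 42);
    return received.length === 1 && received[0] === 42;
  },
  "ignores other messages": (bus) => {
    let calls = 0;
    bus.on("addTest", () => {
      calls += 1;
    });
    bus.emit("clearResults", undefined);
    return calls === 0;
  },
};

// Run every check against every implementation and collect the failures.
function runContract(implementations: Record<string, () => Bus>): string[] {
  const failures: string[] = [];
  for (const [implName, create] of Object.entries(implementations)) {
    for (const [checkName, check] of Object.entries(contract)) {
      if (!check(create())) failures.push(`${implName}: ${checkName}`);
    }
  }
  return failures;
}

const failures = runContract({
  MapBus: () => new MapBus(),
  ArrayBus: () => new ArrayBus(),
});
console.log(failures.length === 0 ? "both implementations honor the contract" : failures);
```

The important property is that the checks never mention a concrete class. Any behavioral difference between the two implementations shows up as a failure attributed to exactly one of them.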

I pondered this problem for some time and played around with different ideas. Eventually I concluded that the only viable approach was to proxy all messages from within the WebView to the test using a WebSocket. The test runs a Socket.IO server that accumulates incoming messages in a buffer and can send messages back over the socket. Assertions can then be made against the contents of the buffer to make sure that all expected messages have arrived. When a certain environment variable is set, the extension loads a different JS bundle, a "fake WebView", specifically for the contract test. Rather than running the React application, this fake WebView connects to the WebSocket server and proxies all messages to and from the messaging adapter.
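The buffer-and-assert part of this design does not depend on the transport. As a rough sketch with the networking left out, the test side boils down to appending incoming messages to an array and checking that each expected message is matched, as a partial object, by some buffered one. The `matchesSubset` and `containsAll` helpers and the `fileName` field below are hypothetical. The actual suite relies on a `containSubset` assertion for this.

```typescript
type Message = { command: string; data?: Record<string, unknown> };

// True when every key in `expected` is present in `actual` with a matching
// value; nested plain objects are compared recursively.
function matchesSubset(expected: unknown, actual: unknown): boolean {
  if (typeof expected !== "object" || expected === null) {
    return expected === actual;
  }
  if (typeof actual !== "object" || actual === null) {
    return false;
  }
  return Object.entries(expected).every(([key, value]) =>
    matchesSubset(value, (actual as Record<string, unknown>)[key]),
  );
}

// True when each expected message is matched by at least one buffered message.
function containsAll(buffer: Message[], expected: Array<Partial<Message>>): boolean {
  return expected.every((e) => buffer.some((m) => matchesSubset(e, m)));
}

// The buffer that the test-side server would append to on every message.
const buffer: Message[] = [];
const onMessage = (message: Message): void => {
  buffer.push(message);
};

// Simulate messages arriving from the proxied WebView.
onMessage({ command: "clearResults" });
onMessage({
  command: "initPanel",
  data: { language: "typescript", fileName: "foo.ts" },
});

console.log(
  containsAll(buffer, [{ command: "initPanel", data: { language: "typescript" } }]),
); // true
console.log(containsAll(buffer, [{ command: "addTest" }])); // false
```

Matching on subsets keeps the assertions focused on the fields a test cares about, so extra fields added to a message later do not break existing tests.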

The Fake WebView

    // fake-webview.ts

    import { io } from "socket.io-client";
    import { PanelMessage, VSCodeAPI } from "./services/vscodeApi";

    console.log("starting websocket adapter");

    // Connect to the Socket.IO server started by the contract test
    const socket = io("ws://localhost:3030");
    const hostApi = new VSCodeAPI(tsvscode);

    // Forward every message coming from the extension to the test
    hostApi.proxyTo((message) => {
      console.log("sending message to test", message);
      return socket.emit("message", message);
    });

    // Forward every message coming from the test to the extension
    socket.on("message", (message: PanelMessage) => {
      console.log("got message from test", message);
      hostApi.emit(message.type, message.value);
    });

    // vscodeApi.ts

    export class VSCodeAPI implements HostAPI {
      constructor(private msgPoster: VSCodeMsgPoster) {}

      ...

      // Forwards every incoming window message, regardless of its command
      proxyTo<M>(listener: (message: M) => void) {
        window.addEventListener("message", ({ data }) => {
          listener(data);
        });
      }
    }

The Contract Test suite

    suite("Contract tests", () => {
      const realDriver = new FakeWebViewDriver();
      const memoryDriver = new MemoryHostApiDriver(new MemoryHostAPI());

      // Every test runs against both the real adapter and the in-memory fake
      [realDriver, memoryDriver].forEach((driver) => {
        test(`${driver.name}: init flow`, async () => {
          const messages = await driver.receiveMessagesFromExtension();

          await waitFor(() =>
            expect(messages).to.containSubset([
              { command: "clearResults" },
              {
                command: "initPanel",
                data: {
                  language: "typescript",
                },
              },
              { command: "addTest" },
            ]),
          );
        });

        test(`${driver.name}: regenerate`, async () => {
          const messages = await driver.receiveMessagesFromExtension();

          driver.sendMessageToExtension({
            type: "regenerateTests",
            value: { testName: "fooBarBaz" },
          });

          await waitFor(() =>
            expect(messages).to.containSubset([
              { command: "clearResults" },
              { command: "addTest" },
            ]),
          );
        });
      });
    });

    import { createServer } from "http";
    import { Server } from "socket.io";

    // Allows the test to interact with the WebView
    class FakeWebViewDriver {
      name = "VSCode API";
      private io: Server;

      constructor() {
        // Start the Socket.IO server that the fake WebView connects to
        const httpServer = createServer();
        this.io = new Server(httpServer, {
          cors: {
            origin: "*",
          },
        });
        httpServer.listen(3030);
      }

      async receiveMessagesFromExtension() {
        // Accumulate every proxied message in a buffer for later assertions
        const hostMessages: { command: string; data: any }[] = [];
        this.io.on("connection", (socket) => {
          socket.on("message", (message: any) => {
            hostMessages.push(message);
          });
        });
        return hostMessages;
      }

      sendMessageToExtension<M>(m: M) {
        this.io.emit("message", m);
      }
    }

    // Allows the test to interact with the Memory API
    class MemoryHostApiDriver {
      name = "memory API";

      constructor(private memoryAPI: MemoryHostAPI) {}

      async receiveMessagesFromExtension() {
        const hostMessages: HostMessage[] = [];
        // ".*" matches every message name ("*" alone is not a valid RegExp)
        this.memoryAPI.on(".*", (m: HostMessage) => hostMessages.push(m));
        return hostMessages;
      }

      sendMessageToExtension<M extends { type: string; value: unknown }>(m: M) {
        this.memoryAPI.emit(m.type, m.value);
      }
    }

Since all tests in the Contract Test suite pass against both the driver wrapping our memory fake and the driver that connects to the real implementation via the WebSocket, it's safe to assume that both of them indeed conform to the same interface. If a bug arises that is caused by mismatching behavior, it can be reproduced by adding a new failing test to this suite, and then fixed by modifying either the fake or the real implementation to make the test pass.
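The suite above calls a `waitFor` helper whose implementation isn't shown. One plausible shape for such a helper, offered here as an assumption rather than the actual code, is a polling loop that retries an assertion until it stops throwing or a timeout elapses. The `timeoutMs` and `intervalMs` option names are hypothetical.

```typescript
// Retries `assertion` until it stops throwing; once the deadline has passed,
// rethrows the most recent error instead of retrying again.
async function waitFor<T>(
  assertion: () => T | Promise<T>,
  { timeoutMs = 5000, intervalMs = 50 } = {},
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    try {
      return await assertion();
    } catch (error) {
      if (Date.now() >= deadline) throw error;
      await new Promise((resolve) => setTimeout(resolve, intervalMs));
    }
  }
}

// Usage: the message arrives asynchronously, so the assertion is retried
// until the buffer contains it.
const received: string[] = [];
setTimeout(() => received.push("addTest"), 100);

const done = waitFor(() => {
  if (received.length === 0) throw new Error("addTest not received yet");
  return received.length;
});
done.then((count) => console.log(`received ${count} message(s)`));
```

Polling like this is what lets the same test body work against both drivers: the in-memory fake satisfies the assertion on the first attempt, while the WebSocket-backed driver may need a few retries.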

Summary

I'd like to extend my thanks to the CodiumAI team for allowing me to blog about my experience with them. It was a pleasure working with a team that understands the importance of creating a fast and effective test suite early in the development process, even though their own models are as yet incapable of creating such sophisticated tools and infrastructure. I'm certain that this early investment will pay for itself tenfold within the next year or two.

The combined use of blazingly fast Acceptance Tests written with Testing Library against an in-memory fake, a Contract Test suite that asserts that the fake and real adapters conform to the same interface, and a Playwright-based End-to-End Test to ensure proper integration of all components allows CodiumAI to achieve extensive test coverage without sacrificing developer experience or correctness. The method of proxying window messages to the test using a WebSocket is a great takeaway from this project and can be applied to other platforms that utilize web views, such as mobile or desktop applications, or when testing IFrame-based micro-frontends.
